In the previous article, we discussed what’s currently happening in the relationship between AI companies and publishers, specifically focusing on digital publishers.

We evaluated how the 3C’s of consent, credit and compensation are likely working their way into those partnerships, both through the lens of training and inference.

In this article, we will continue that conversation by discussing why that model doesn’t work well with books, and why it’s a problem worth solving.

Book publisher content licensing for usage with AI companies

We’ve talked a lot about training and inference in this ebook, and by now, I hope you’re beginning to understand the implications of each.

Here’s another huge one.

Experts estimate that the AI inference market will be 100x the size of the AI training market.

This is a massive issue because in their current format, books are best positioned to train AI, and not for AI inference; whereas digital publishers, due to their inherent structural differences, are well-positioned to participate in both training and inference opportunities.

This issue is compounded by the fact that there’s reason to believe the days of AI companies paying to license content to train their models will be short-lived.

Consider Meta’s (Facebook) approach to AI.

Where companies like Google, OpenAI, and Microsoft have built closed-source LLMs, Meta has made their line of LLMs (called Llama) open-source.

If you’re unfamiliar with the two terms, it’s fundamentally the difference between making software private (closed-source) versus public (open-source).

As you might imagine, if Meta makes their AI open source and free to use, Meta has to rely on different sources of data to train their AI, as they don’t have the same budget to purchase content as OpenAI has done.

Meta CEO Mark Zuckerberg recently stated that creators overestimate the value of their work in training AI, and they won’t pay to license content to train their AI.

He claims that there are enough other public resources available for training AI, and if creators don’t want them to use their content, they just won’t use it.

Plain and simple.

On the one hand, it may be comforting to know that book IP may not be as critical as some may say to the training of AI.

On the other hand, since books are best positioned to participate in training AI, it means unless something changes, they could completely miss out on the much larger inference opportunity AI represents.

It’s early to make the estimate, but if it turns out the market for training data dries up like Mark Zuckerberg estimates, Inference will turn out to be an infinitely larger opportunity, not just 100x larger than the AI training market.

However, for the sake of discussion, let’s assume AI companies will continue to pay to license data for Training, and discuss why these partnerships need to look different from digital publishers.

We’ve had to cover a lot of ground to get here, but we’re finally arriving at the core issues we came here to talk about.

Why are books best positioned for training AI and not inference?

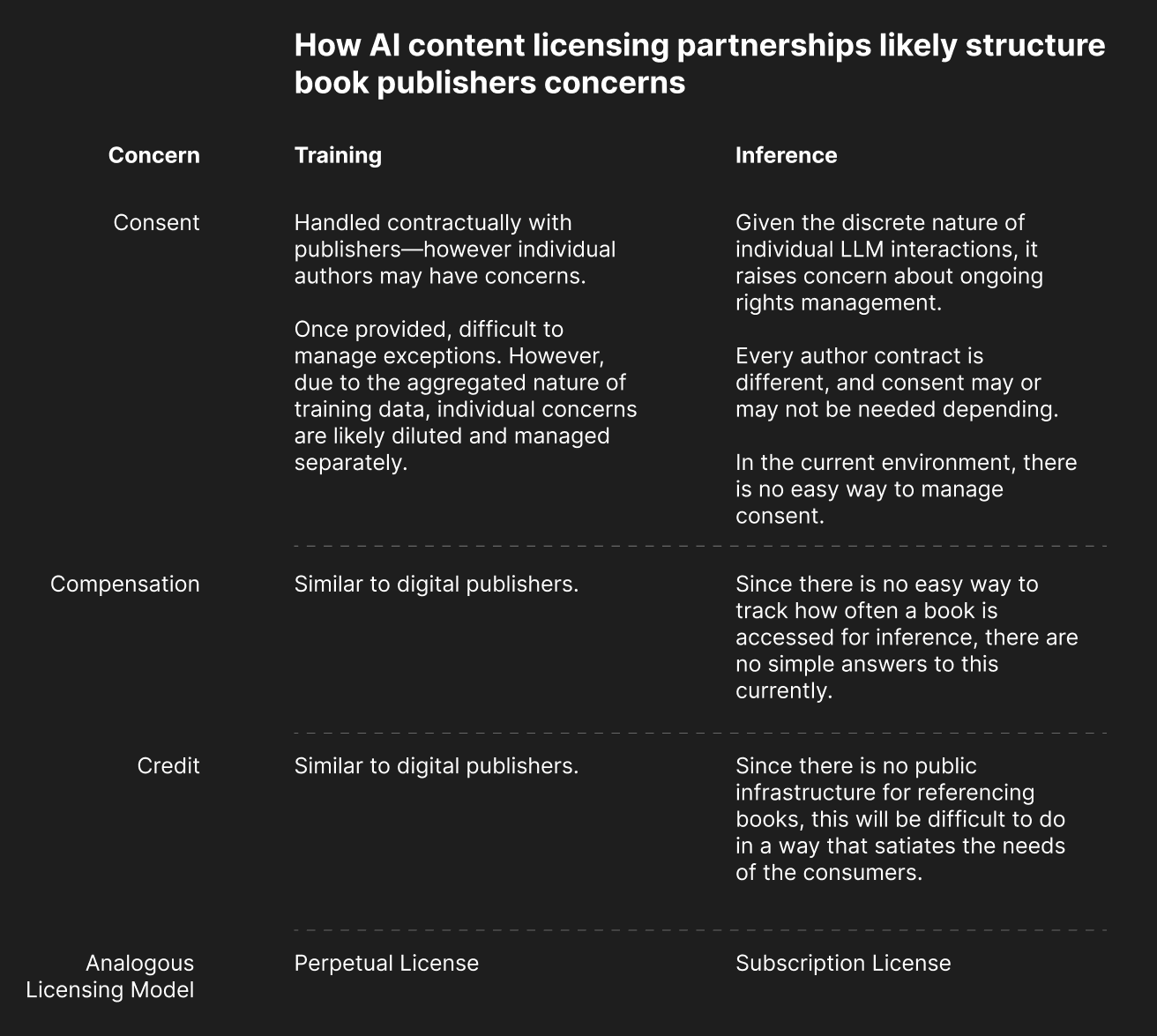

Similar to how we analyzed the digital publisher perspective, let’s answer this question by analyzing how the three C’s—credit, consent, and compensation—should be addressed in AI partnerships, first through the lens of Training and then Inference.

Book Content Licensing: Training

Not having access to the details, I’d bet this is the primary focus of the Wiley AI partnership; partially because their public announcement specifies that they licensed their data to AI companies to “train their Large Language Models (LLMs)”, but mainly due to my experience and understanding how the AI works, I have little reason to believe their partnership would include inference-based applications.

I’ll lay out my reasoning for this belief over the next few sections.

Consent and Compensation

Similar to the licensing of digital publisher data, licensing book IP for training would also likely fall under a similar perpetual licensing model.

The license for the IP should take into consideration each successive new model the AI company trains, which would make sense as the data requirements for each model shift as the researchers learn more about what type of data they actually need.

In this instance, both the consent and terms of compensation would be worked into the same agreement.

Books today are well-positioned for use in training LLMs because you need massive amounts of unstructured text with diverse language patterns, vocabulary, and context.

An LLM is designed to learn from raw natural language, which is inherently unstructured, to capture the extraordinarily complex relationships between words, sentences, and domains.

As long as the book exists in a digital format, the content can easily be extracted and used to train an LLM.

This is good news for books, because these days, nearly all books exist in some digital format.

So all a book publisher needs to do is sort out consent and compensation through a perpetual license agreement, and then dump their books into an FTP and share it with an AI company as a training “data set”.

While this “works”, the biggest challenge is once the AI company acquires the data, the book publisher loses complete control over how that data is used.

Credit

All of the same points from our analysis of Digital Publisher content apply here, which mainly point to the inherent difficulties of providing credit to source materials based on training alone; it’s the two piano challenges we discussed of (1) self-referencing and (2) how to source the training of every note — there’s no pragmatic way to do this.

However, AI companies can use formal partnerships with book publishers, like Wiley, as a way to publicly provide credit to them for their IP and convey the copasetic use of Book IP in training their models.

Book Content Licensing: Inference

Recall, experts estimate Inference will be 100x the market opportunity that Training represents.

And this is where we encounter the biggest problem: in today’s system,

There is no easy way to license and use books for AI inference.

This means there are no easy ways for book IP holders to leverage their content to participate in the largest opportunity around AI.

Let’s dissect this through the 3 concern lenses of credit, consent, and compensation — in that order.

Credit

This should be the easiest problem to solve for books.

One of the best ways to use books with an LLM is with RAG — to inject the book text into the context window and ask the LLM to reference the text and answer questions about it.

While there are legal considerations, technically performing RAG with book content is quite straightforward.

The challenge comes in how to provide credit for it.

If you are the one copying the text from the book and putting it into the LLM context window for your prompt, then you can be confident that the data the LLM references came from the book.

But what happens when you are not providing the data for RAG?

With Google and Reddit, this is the case. Users do not provide content directly; Google handles that behind the scenes.

Google has spent the last 25 years solving the biggest problems in connecting the immense depths of the data on the internet and making it easy to surface the information you’re looking for.

The reason you believe search results with Google is not because you take Google’s word for it, but because Google can instantly connect you with the source of their results, and then you can make your own determination around the validity of those sources.

But how would you do this with a book (specifically those under copyright)?

The LLM could provide you with all the information you want to know about the book; it could even give you the exact location by word position in the book — but then as the user, unless you have the book sitting in your library and you’re willing to look it up yourself, how do you verify the results?

It’s not like you can go to http://www.INSERT_BOOK_TITLE.com/chapter1 and find a reference to the source material.

The inability to generate referenceable attributes with books is a massive impediment to leveraging books for inference and providing credit.

Consent

Previously we’ve connected to the idea of a pianist not being able to cite any specific location where they learned to play, but rather they built their skills through many sources — and thus, no single source stands alone or can be highlighted.

Part of the power of inference, however, is the ability to provide a source and intentionally highlight a specific piece of content. Like Google, this is how you build credibility with the user.

The headline of the article about the Wiley announcement confirmed that authors could not choose to “opt out” of that partnership.

For Wiley, this likely won’t ultimately be an issue. In addition to their strong market reputation, they’re providing training data “soup” for AI companies, and thus individual author’s content is likely to be immediately highlighted—which minimizes the potential impact to impact any single author.

But what would happen when an LLM through inference responds to a prompt and cites a specific author who doesn’t want their data in the LLM, or a book publisher didn’t have permission contractually to integrate?

The training of the LLM is complete — the data is already incorporated into the model and like music in the pianist’s brain — the author’s data cannot be easily extracted.

Reddit does not face this same challenge with Google, because as part of the Privacy Policy you agree to when you check that box to use their site, you own the copyright of your data, but you implicitly grant Reddit an unrestricted license to use your data.

The same is not true in most contracts with authors and book publishers.

Extensive resources go into every contract, defining who has permission for every single thing, and ultimately declaring the bounds of what they can do with it.

Inference moves from handling rights in aggregate, and looks at them from an individual perspective — and these are the scenarios that the current infrastructure is very poorly equipped to handle.

Compensation

As previously mentioned, the inference model most readily relates to a subscription model.

Reddit made the change of charging users every time they accessed data over their API.

Most AI companies operate the same way — they charge users for every time they access their models via their API.

Ideally, there would be some way to track how often (and how much of) a book is referenced in inference, and the consumer would be charged based on their usage of the source material.

However, with books, it’s not clear how this would work.

As we mentioned previously, there is no way to canonically reference the content of a book, much less track the usage of that data by an LLM in a way that provides the consumer access to it.

This is one of the biggest challenges that will prevent book publishers from capturing this potentially enormous revenue stream.

The crux of the issue

I’ve summarized the key points for this section in the below matrix:

Perhaps your natural inclination after reading thus far is to dismiss the challenges. Perhaps some of these thoughts crossed your mind

“Books aren’t going anywhere, people are always going to read. This issue is not relevant.”

“Books have survived every technological advancement of the last thousand years, this one is no different.”

“So all that’s needed is a book API, and everything is fixed. Easy peasy.”

“These issues seem trivial, I’m sure someone is working on them.”

It may seem simple and obvious, but there are three reasons why I think these issues are critical and imminently relevant.

First, the biggest impact often comes from the simplest changes.

Consider Stripe, an online payment processor.

Stripe was not the first to handle online payments; there were many companies before them.

They were the first to realize that their competition’s documentation was terrible, which made it difficult for software developers those solutions.

So Stripe focused on generating excellent documentation for their product, and as a result, many developers readily adopted their product. Stripe now handles nearly 25% of all online financial transactions

Sometimes you have to acknowledge the problems hiding in plain sight before something can change. I believe this is the case with books.

With a few simple changes and a little technology, I think there could be a massive opportunity waiting for book publishers.

Second is the question of who will own the problem and bring the solution to market.

If a little technology could go a long way, then the question is who is going to build it?

The natural response is, “Well this is the publisher’s problem, they should build it”.

But if this were true, why haven’t book publishers been able to realistically compete with Amazon? Isn’t selling and distributing books to people via the Internet also their problem?

I would argue it’s because book publishers focus on what they’re excellent at — discovering, curating, and bringing big ideas to market in the form of books — and not on building technology products.

My understanding is that book publishers have a tenuous relationship with Amazon, at best, and that offered a viable alternative, most would take it.

Perhaps the solution is another tech company should own the problem and lead the conversation.

But then how do you prevent another Amazon situation from happening, where 20 years down the road when that tech company owns the books and AI ecosystem, and book publishers are forced to operate at the whim of their AI distributor?

Maybe each book publisher is left to solve this problem on their own?

Given the fractured nature and current trajectory of the industry, I believe this is likely where things are headed, and is part of the problem “…unless. something changes…”.

Finally, the opportunity at hand is massive and should not be dismissed.

If handled correctly, this is not just about licensing book data for training AI, or becoming a footnote in a ChatGPT conversation or Google search.

The opportunity here is a complete evolution for books that could unlock limitless new applications and market opportunities.

Consider Wiley.

For the last 2 years, Wiley has made ~$22MM per year licensing their content to train AI.

If the inference opportunity is 100x that of training, then they could be sitting on a $2.2BN opportunity to license their data for inference-based applications.

True, it may cannibalize some of their current business.

But even if it cannibalized all of their current business, it would represent a 10% revenue increase from where they sit today at a much higher gross margin — which would have a massive increase on their bottom line ($17MM net income in 2023; trending negative in 2024).

Let’s now turn our attention to what type of future could unlock the 100x inference opportunity for books.

This was the third article in a four-part series discussing the Future of AI and Books. If you’d like to continue reading, click the relevant link below.

- AI and the Future of Book Publishing

- The Current State of AI and Publishing

- Why the Established AI Content Licensing Model Breaks with Books

- Vision and Call to Action for the Future of Books and AI

If you’re a bit fuzzy on some of the AI terminology used in this article, I’d recommend reading my “Beginner’s Guide to Understanding Generative AI”.

.jpg)